One of the key design decisions we made in developing pAI-OS was to run the frontends (by default, a single-page web application written in React) and the backend (a Python Flask app) as separate services. This separation of concerns gives us finer-grained control over API security and lets us use the tools and techniques we already know best for each layer. Because we are strict about not using private APIs, you can be sure that every feature and function you see in one interface is available to all of them.

Ensuring that all requests and responses comply with a predefined specification is an increasingly important part of a multi-layer defense strategy for APIs. At pAI-OS, we have adopted OpenAPI and "pattern" regexps with Connexion to achieve this without having to rely on third-party services (though you can upload your OpenAPI spec to providers like Cloudflare, who will enforce it before requests even reach your server!).

Why OpenAPI and Connexion?

OpenAPI is a powerful specification for defining APIs, allowing us to describe our API endpoints, request/response formats, and validation rules in a standardized way. Connexion is a Python framework that automates the validation of requests and responses against an OpenAPI specification. By integrating these tools, we ensure that our API adheres to strict validation rules, reducing the risk of security vulnerabilities and improving overall reliability. Validation carries a small performance penalty, but given this is an administrative interface rather than one that's involved in user requests, the trade-off is worth it.
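To make the wiring concrete, here is a minimal sketch of how a Connexion app can be pointed at a spec with both request and response validation switched on. The directory and file names (`apis`, `openapi.yaml`) and the `create_app` helper are placeholders for illustration, not necessarily what pAI-OS uses.

```python
def create_app(spec_dir: str = "apis", spec_file: str = "openapi.yaml"):
    """Build a Connexion app that validates traffic against an OpenAPI spec.

    spec_dir and spec_file are hypothetical placeholders.
    """
    # Imported lazily so this module still loads where Connexion is not installed.
    import connexion

    app = connexion.FlaskApp(__name__, specification_dir=spec_dir)
    app.add_api(
        spec_file,
        strict_validation=True,   # reject request parameters the spec does not declare
        validate_responses=True,  # also check outgoing responses against the spec
    )
    return app
```

Response validation is the part most likely to cost time per request, which is the trade-off described above.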

Using "pattern" Regular Expressions for Validation

One of the key features we leverage in our OpenAPI spec is the use of "pattern" regexps. These regular expressions allow us to define precise validation rules for various parts of our API, such as path parameters, query parameters, and request bodies. By specifying patterns, we can enforce constraints on the data being sent to and from our API, ensuring it meets our security and format requirements.
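One detail worth seeing in code: a JSON Schema `pattern` is not implicitly anchored, so it matches anywhere in the string unless you add `^` and `$` yourself. The sketch below imitates that behaviour with Python's `re.search`; `matches_pattern` and the two sample patterns are made up for illustration.

```python
import re


def matches_pattern(value: str, pattern: str) -> bool:
    """Imitate a schema `pattern` check: the regex may match anywhere in value."""
    return re.search(pattern, value) is not None


UNANCHORED = "[0-9]{4}"    # hypothetical pattern without anchors
ANCHORED = "^[0-9]{4}$"    # the same pattern, anchored at both ends

print(matches_pattern("1234; rm -rf /", UNANCHORED))  # True: only a substring has to match
print(matches_pattern("1234; rm -rf /", ANCHORED))    # False: the whole value must match
print(matches_pattern("1234", ANCHORED))              # True
```

This is why the filename and UUID patterns in the examples start with `^` and end with `$`.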

Examples

Filenames

pAI-OS is designed to be cross-platform, but different platforms accept different characters in filenames (and use different path formats too, which is why we use pathlib.Path internally). We also use the following regexp to ensure that filenames are valid on macOS, Windows, and Linux:

    fileName:
      type: string
      description: A filename that is valid on macOS, Windows, and Linux
      example: Mistral-7B-Instruct-v0.3-Q8_0.gguf
      pattern: '^[^<>:;,?"*|/]+$'
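As a quick sanity check, the same pattern can be exercised directly in Python. This is only a sketch of what the validator does with the regex; `is_valid_filename` is a name we made up here.

```python
import re

# The pattern from the fileName schema.
FILENAME_PATTERN = re.compile(r'^[^<>:;,?"*|/]+$')


def is_valid_filename(name: str) -> bool:
    """Return True if name contains none of the excluded characters."""
    return FILENAME_PATTERN.search(name) is not None


print(is_valid_filename("Mistral-7B-Instruct-v0.3-Q8_0.gguf"))  # True
print(is_valid_filename("../../etc/passwd"))                    # False: contains "/"
print(is_valid_filename("model?.gguf"))                         # False: contains "?"
print(is_valid_filename(""))                                    # False: "+" needs one character
```

Note that a bare `..` and names containing a backslash still match this pattern, so a check like this complements careful path handling rather than replacing it.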

UUIDs

For scalability and security, we don't let users define the id of objects they create (via POST) or update (via PUT). Instead, we generate UUIDs for them. This way we can ensure that the id of an object is a valid UUID, that it follows the correct format, and that it's unique even if it's generated by one of several servers.

Rather than hoping clients only ever send us valid UUIDs, we enforce it with a pattern regexp:

    uuid4:
      type: string
      format: uuid
      example: 7bea4732-214f-40e7-9161-4e7241a2b97e
      pattern: ^[0-9a-f]{8}-[0-9a-f]{4}-4[0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}$
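Here is a small sketch of both halves of that idea: generating the id on the server with Python's standard `uuid` module, and checking incoming ids against the same pattern. The helper names `new_object_id` and `is_uuid4` are ours, chosen for illustration.

```python
import re
import uuid

# The pattern from the uuid4 schema: lowercase hex, version 4, RFC 4122 variant.
UUID4_PATTERN = re.compile(
    r"^[0-9a-f]{8}-[0-9a-f]{4}-4[0-9a-f]{3}-[89ab][0-9a-f]{3}-[0-9a-f]{12}$"
)


def new_object_id() -> str:
    """Generate an id on the server instead of trusting one from the client."""
    return str(uuid.uuid4())


def is_uuid4(value: str) -> bool:
    """Return True if value is a lowercase version 4 UUID string."""
    return UUID4_PATTERN.search(value) is not None


print(is_uuid4(new_object_id()))                         # True
print(is_uuid4("7bea4732-214f-40e7-9161-4e7241a2b97e"))  # True
print(is_uuid4("7BEA4732-214F-40E7-9161-4E7241A2B97E"))  # False: uppercase is rejected
print(is_uuid4("1 OR 1=1"))                              # False
```

The `4` and `[89ab]` positions pin the version and variant, so UUIDs of other versions are rejected as well.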

Emails

For a more complex example one need look no further than an almost 100% RFC-compliant email regex:

    email:
      type: string
      format: email
      example: [email protected]
      pattern: (?:[a-z0-9!#$%&'*+/=?^_`{|}~-]+(?:\.[a-z0-9!#$%&'*+/=?^_`{|}~-]+)*|"(?:[\x01-\x08\x0b\x0c\x0e-\x1f\x21\x23-\x5b\x5d-\x7f]|\\[\x01-\x09\x0b\x0c\x0e-\x7f])*")@(?:(?:[a-z0-9](?:[a-z0-9-]*[a-z0-9])?\.)+[a-z0-9](?:[a-z0-9-]*[a-z0-9])?|\[(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?|[a-z0-9-]*[a-z0-9]:(?:[\x01-\x08\x0b\x0c\x0e-\x1f\x21-\x5a\x53-\x7f]|\\[\x01-\x09\x0b\x0c\x0e-\x7f])+)\])
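To show how a pattern of this size behaves, here is a sketch that compiles the email regex in Python, split across several raw strings for readability. The sample addresses are made-up placeholders, and `is_email` is a helper we named for this example. It uses `fullmatch`, which is stricter than a validator applying the unanchored pattern as a search.

```python
import re

# The email pattern, split into adjacent raw strings that Python concatenates.
EMAIL_PATTERN = re.compile(
    r'''(?:[a-z0-9!#$%&'*+/=?^_`{|}~-]+(?:\.[a-z0-9!#$%&'*+/=?^_`{|}~-]+)*'''
    r'''|"(?:[\x01-\x08\x0b\x0c\x0e-\x1f\x21\x23-\x5b\x5d-\x7f]|\\[\x01-\x09\x0b\x0c\x0e-\x7f])*")'''
    r'''@(?:(?:[a-z0-9](?:[a-z0-9-]*[a-z0-9])?\.)+[a-z0-9](?:[a-z0-9-]*[a-z0-9])?'''
    r'''|\[(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}'''
    r'''(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?|[a-z0-9-]*[a-z0-9]:'''
    r'''(?:[\x01-\x08\x0b\x0c\x0e-\x1f\x21-\x5a\x53-\x7f]|\\[\x01-\x09\x0b\x0c\x0e-\x7f])+)\])'''
)


def is_email(value: str) -> bool:
    """Return True only if the whole value matches the email pattern."""
    return EMAIL_PATTERN.fullmatch(value) is not None


print(is_email("user@example.com"))                 # True
print(is_email("first.last+tag@mail.example.org"))  # True
print(is_email("User@example.com"))                 # False: the pattern is lowercase-only
print(is_email("not-an-email"))                     # False

# Without ^ and $, a search-style match accepts any string that contains an address.
print(EMAIL_PATTERN.search("junk user@example.com junk") is not None)  # True
```

If whole-value matching matters for a field like this, anchoring the pattern in the spec is the simplest way to get it.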

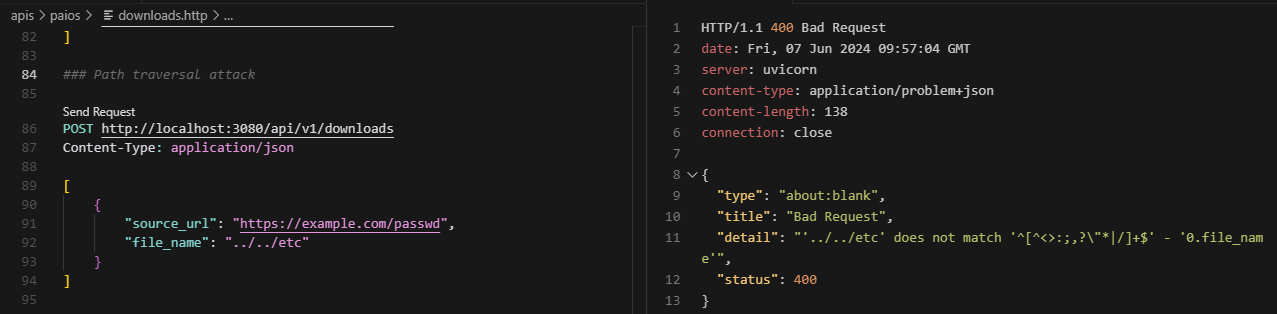

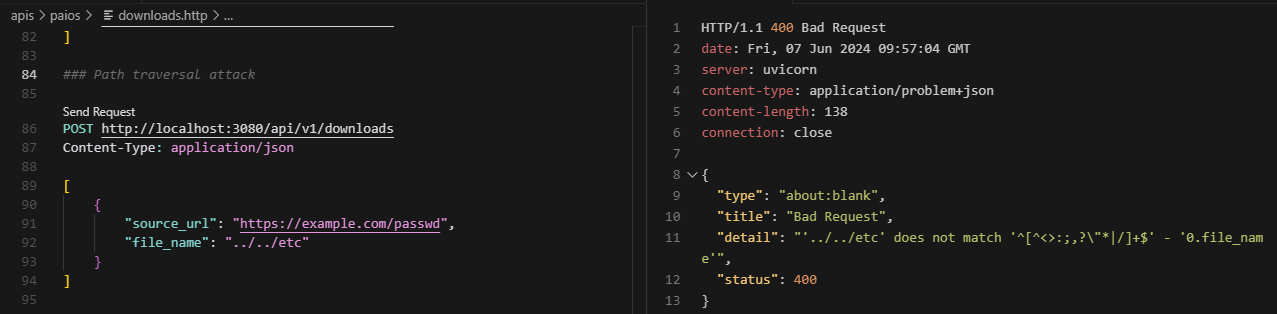

Path Traversal Example

For example, here Connexion has intercepted an attempt to exploit a path traversal vulnerability before the request reached the rest of our code:
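The effect can be sketched without any framework. The decorator below is a hand-rolled stand-in for the validation layer, not Connexion's actual code: it answers with a 400 when the parameter fails the filename pattern, so the handler never runs. The names `validated`, `download`, and `calls` are hypothetical.

```python
import re

FILENAME_PATTERN = re.compile(r'^[^<>:;,?"*|/]+$')

calls = []  # records every filename that actually reaches the handler


def validated(handler):
    """Reject bad filenames with a 400 before the wrapped handler is called."""
    def wrapper(file_name: str):
        if FILENAME_PATTERN.search(file_name) is None:
            return 400, f"'{file_name}' does not match '{FILENAME_PATTERN.pattern}'"
        return handler(file_name)
    return wrapper


@validated
def download(file_name: str):
    """Hypothetical handler standing in for the rest of our code."""
    calls.append(file_name)
    return 200, f"serving {file_name}"


print(download("../../etc/passwd")[0])  # 400
print(download("model.gguf")[0])        # 200
print(calls)                            # ['model.gguf']
```

The traversal attempt never appears in `calls`, which is the property we rely on: invalid input is turned away at the edge.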

Conclusion

In summary, by using OpenAPI and Connexion, we can ensure that every request and response is validated against our specification before it reaches the rest of our code. This gives us finer-grained control over API security, at the cost of a small performance penalty. And because we are strict about not using private APIs, every feature and function you see in one interface is available to all of them.